DeepSeek, the Chinese language AI lab that not too long ago upended trade assumptions about sector growth prices, has launched a brand new household of open-source multimodal AI fashions that reportedly outperform OpenAI’s DALL-E 3 on key benchmarks.

Dubbed Janus Professional, the mannequin ranges from 1 billion (extraordinarily small) to 7 billion parameters (close to the dimensions of SD 3.5L) and is obtainable for speedy obtain on machine studying and information science hub Huggingface.

The most important model, Janus Professional 7B, beats not solely OpenAI’s DALL-E 3 but additionally different main fashions like PixArt-alpha, Emu3-Gen, and SDXL on trade benchmarks GenEval and DPG-Bench, in line with info shared by DeepSeek AI.

Its launch comes simply days after DeepSeek made headlines with its R1 language mannequin, which matched GPT-4’s capabilities whereas costing simply $5 million to develop—sparking a heated debate in regards to the present state of the AI trade.

The Chinese language startup’s product has additionally triggered sector-wide considerations it might upend incumbents and knock the expansion trajectory of main chip producer Nvidia, which suffered the most important single-day market cap loss in historical past on Monday.

DeepSeek’s Janus Professional mannequin makes use of what the corporate calls a “novel autoregressive framework” that decouples visible encoding into separate pathways whereas sustaining a single, unified transformer structure.

This design permits the mannequin to each analyze pictures and generate pictures at 768×768 decision.

“Janus Professional surpasses earlier unified mannequin and matches or exceeds the efficiency of task-specific fashions,” DeepSeek claimed in its launch documentation. “The simplicity, excessive flexibility, and effectiveness of Janus Professional make it a robust candidate for next-generation unified multimodal fashions.”

Not like with DeepSeek R1, the corporate didn’t publish a full whitepaper on the mannequin however did launch its technical documentation and made the mannequin out there for speedy obtain freed from cost—persevering with its apply of open-sourcing releases that contrasts sharply with the closed, proprietary method of U.S. tech giants.

So, what’s our verdict? Nicely, the mannequin is very versatile.

Nevertheless, don’t count on it to exchange any of probably the most specialised fashions you’re keen on. It could generate textual content, analyze pictures, and generate images, however when pitted in opposition to fashions that solely do a type of issues properly, at greatest, it’s on par.

Testing the mannequin

Be aware that there isn’t any speedy method to make use of conventional UIs to run it—Comfortable, A1111, Focus, and Draw Issues aren’t suitable with it proper now. This implies it’s a bit impractical to run the mannequin regionally and requires going via textual content instructions in a terminal.

Nevertheless, some Hugginface customers have created areas to strive the mannequin. DeepSeek’s official house will not be out there, so we advocate utilizing NeuroSenko’s free house to strive Janus 7b.

Concentrate on what you do, as some titles could also be deceptive. For instance, the Area run by AP123 says it runs Janus Professional 7b, however as a substitute runs Janus Professional 1.5b—which can find yourself making you lose quite a lot of free time testing the mannequin and getting dangerous outcomes. Belief us: we all know as a result of it occurred to us.

Visible understanding

The mannequin is nice at visible understanding and may precisely describe the weather in a photograph.

It confirmed a very good spatial consciousness and the relation between totally different objects.

It is usually extra correct than LlaVa—the most well-liked open-source imaginative and prescient mannequin—being able to offering extra correct descriptions of scenes and interacting with the consumer based mostly on visible prompts.

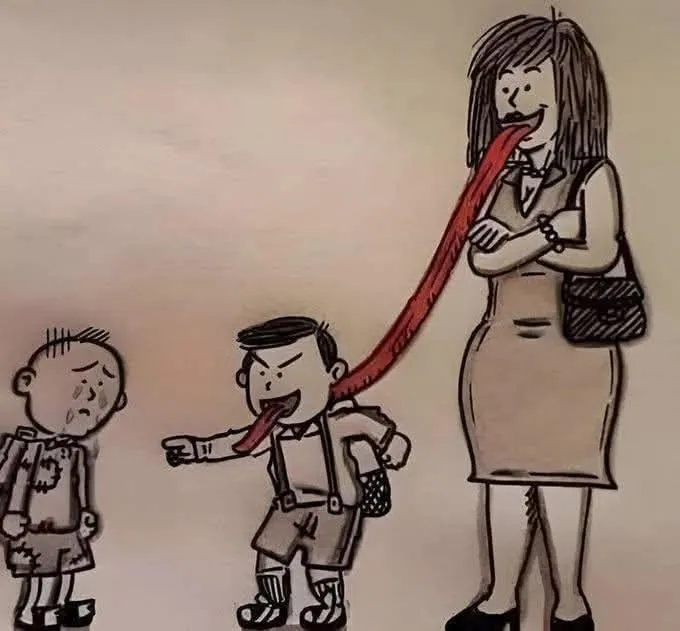

Nevertheless, it’s nonetheless not higher than GPT Imaginative and prescient, particularly for duties that require logic or some evaluation past what is clearly being proven within the photograph. For instance we requested the mannequin to research this photograph and clarify its message

The mannequin replied, “The picture seems to be a humorous cartoon depicting a scene the place a girl is licking the tip of a protracted crimson tongue that’s hooked up to a boy.”

It ended its evaluation by saying that “the general tone of the picture appears to be lighthearted and playful, probably suggesting a state of affairs the place the girl is participating in a mischievous or teasing act.”

In these conditions the place some reasoning is required past a easy description, the mannequin fails more often than not.

Then again, ChatGPT, for instance, truly understood the that means behind the picture: “This metaphor means that the mom’s attitudes, phrases, or values are instantly influencing the kid’s actions, significantly in a damaging method resembling bullying or discrimination,” it concluded—precisely, we could add.

A league of its personal

Picture era seems strong and comparatively correct, although it does require cautious prompting to realize good outcomes.

DeepSeek claims Janus Professional beats SD 1.5, SDXL, and Pixart Alpha, however it’s vital to emphasise this have to be a comparability in opposition to the bottom, non fine-tuned fashions.

In different phrases, the truthful comparability is between the worst variations of the fashions presently out there since, arguably, no person makes use of a base SD 1.5 for producing artwork when there are lots of of high quality tunes able to reaching outcomes that may compete in opposition to even state-of-the-art fashions like Flux or Steady Diffusion 3.5.

So, the generations are in no way spectacular when it comes to high quality, however they do appear higher than what SD1.5 or SDXL used to output once they launched.

For instance, here’s a face-to-face comparability of the pictures generated by Janus and SDXL for the immediate: A cute and lovely child fox with massive brown eyes, autumn leaves within the background enchanting, immortal, fluffy, shiny mane, Petals, fairy, extremely detailed, photorealistic, cinematic, pure colours.

Janus beats SDXL in understanding the core idea: it might generate a child fox as a substitute of a mature fox, as in SDXL’s case.

It additionally understood the photorealistic fashion higher, and the opposite components (fluffy, cinematic) have been additionally current.

That stated, SDXL generated a crisper picture regardless of not sticking to the immediate. The general high quality is best, the eyes are real looking, and the small print are simpler to identify.

This sample was constant in different generations: good immediate understanding however poor execution, with blurry pictures that really feel outdated contemplating how good present state-of-the-art picture turbines are.

Nevertheless, it is vital to notice that Janus is a multimodal LLM able to producing textual content conversations, analyzing pictures, and producing them as properly. Flux, SDXL, and the opposite fashions aren’t constructed for these duties.

So, Janus is much extra versatile at its core—simply not nice at something when in comparison with specialised fashions that excel at one particular job.

Being open-source, Janus’s future as a frontrunner amongst generative AI lovers will rely upon a slew of updates that search to enhance upon these factors.

Edited by Josh Quittner and Sebastian Sinclair

Usually Clever Publication

A weekly AI journey narrated by Gen, a generative AI mannequin.